Community engagement is great. It enables members of a community to contribute their own value-adding work towards a common goal. Whether in the form of corrections, contextual metadata, or entirely new content, contributions from a community are necessary for providing context and relevance to digital collections, and this user-generated content (UGC) is vital for a community's health.

However, as digital spaces make communities increasingly accessible and anonymity increasingly normal, unregulated public access can often lead to unforeseen negative consequences.

We discussed online communities in a previous Recollect resource titled "Grow An Effective Online Community", which explores the methods that can be used to promote an online community. Cultural heritage institutions are one example: a community can form around the desire to preserve specific items and their contextual information.

To learn more about growing your online community, we encourage you to read the full resource. In this resource, however, we focus on the growing need for moderation, and on how effective moderation of online communities can enable community engagement.

What drives a community?

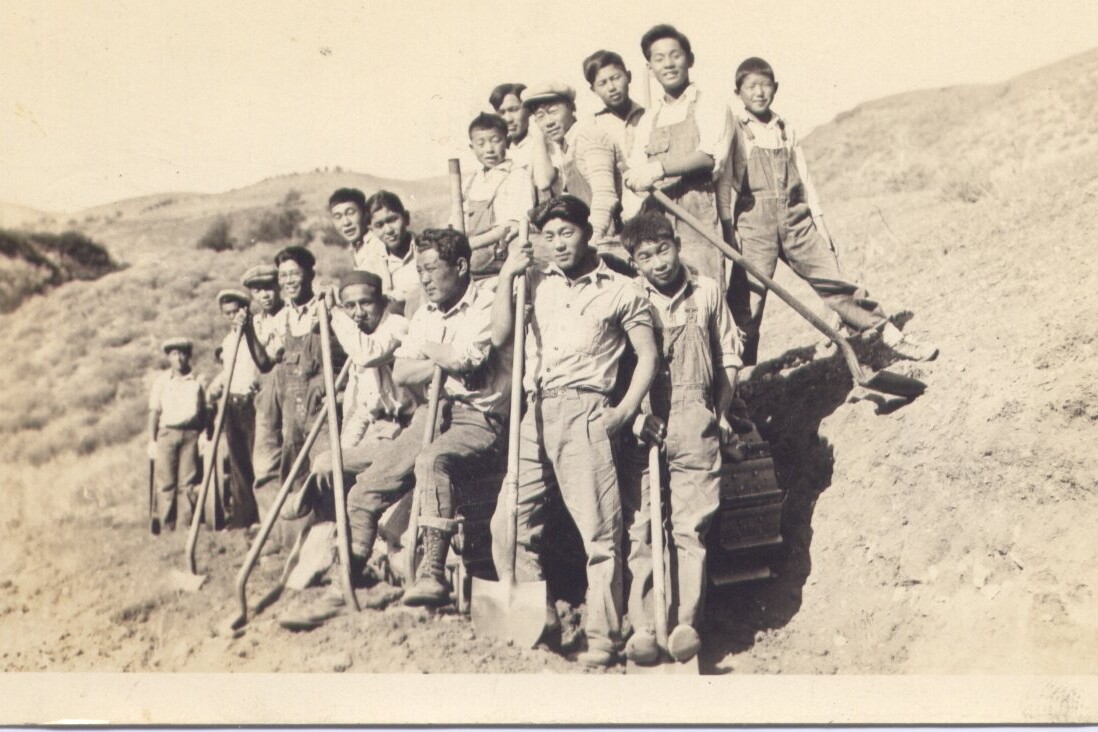

Before we can understand why moderation is necessary, we must understand what drives a community. Contrary to the traditional understanding, communities are not confined to local geographic areas. A community can form wherever a group of individuals shares a certain interest, attitude, or belief, propelled by the interactions and engagement between its members. This community engagement is what drives a community towards its fundamental reason for existing.

Whether they form out of the traditional need to survive in a geographic region or as part of the surge in online forums, communities are driven by a particular purpose: a desire to share information, resources and/or experiences between members.

This holds true for online communities. As technology has advanced and we can now interact instantly with like-minded individuals around the world, online communities have become a massive part of the digital environment, with many people likely participating in several each day. This increased accessibility can be a real strength: with more positive engagement, relationships between members strengthen and contributions continue to benefit the community.

“Members come together to meet common needs, share their experiences, and contribute to a collective knowledge base” – Grow An Effective Online Community.

Yet if the purpose of a community is to collaborate in the pursuit of a common goal, whether that is the accumulation of knowledge, shared experiences, or survival, what happens when contributions don't serve the community's best interest? By intentionally or inadvertently uploading incorrect, irrelevant or disingenuous content, individuals can severely reduce other contributors' willingness to engage with the community and harm its overall health. The issue of unwanted contributions plagues even the largest online communities.

The solution? Moderation.

Why do we need moderated communities?

For a community to survive, it needs to be healthy. In the context of online communities, this means active, positive interaction between members and consistent engagement, with contributions of new ideas, content and information. The need for moderation arises when contributions are either inadvertently incorrect and under-researched, or potentially harmful and distressing to other community members.

When a member uploads content to an online community that disrupts its purpose, is inaccurate and misleading, or causes distress for other members, the community's health suffers. The remaining members become less willing to engage, the community's purpose may be disrupted, and, if left unchecked, these issues can lead to a lack of engagement and potentially the demise of the community.

Disruptive community members contributing incorrect content is a frequent complication that affects online communities regardless of their size. The problem does not disappear once a community is large enough; instead, the volume of unwanted contributions grows along with its member count, a trend that has been observed in large online communities such as Facebook, Twitter, and Instagram.

A lack of moderation is a significant issue, and as the number of internet users grows, moderation is only becoming more important.

Moderation provides a community administrator with the ability to manage and review user-generated content and contributions to their community, identify which content is likely to be incorrect, misleading, harmful, disruptive, or irrelevant, and remove this content before it impacts the health of the community.

Effective moderation becomes particularly significant when a community is representing an entity such as a company, an organisation, or a brand.

What does effective community moderation look like?

Moderation must match a community’s objective

We've discussed how under-moderating a community can be damaging, but excessive moderation can cause the same problems, with equally severe consequences. Community administrators must adopt a method of moderation tailored to their community's purpose.

For example, if a community's purpose is academic, with members sharing knowledge and discussing ideas around a specific academic pursuit, a high degree of moderation and filtering may be needed to ensure the community's purpose is not disrupted. This includes monitoring the community for incorrect, disingenuous or misleading content so that the remaining members receive correct, factual content.

If a community's purpose is social, a different approach to moderation should be considered. Members of this kind of community aim to build social relationships and create connections with other members, a more ambiguous and less specific goal. In this case, community administrators should permit flexibility in contributions, focusing only on removing specific harmful and distressing content. If over-moderation occurs, community members may be less willing to contribute and engage.

Safe spaces for users to contribute

Whether for an academic pursuit or for social browsing, members have the right to expect a safe space in which to contribute to their community.

This is particularly relevant for a branded community. The responsibility for ensuring an online community is safe and free of distressing content falls on the community administrator, and where this entity is a brand or institution, failing to moderate its online space effectively and create a safe environment for users can have huge consequences.

If an institution such as a university has created an online community for academic pursuits, then that university is responsible for the content allowed in the community. Although most members will use the community to share knowledge and discover new ideas, there is always a risk of contributions being incorrect or harmful. If this occurs, the organisation's brand image can suffer, and it may be perceived as unreliable and incompetent.

The lack of control over contributors' intentions, together with the resources needed to moderate a brand community effectively, is why many organisations choose to use a dedicated online community engagement platform, such as Recollect.

A purposeful community

A community does not necessarily need a definitive purpose. In fact, communities usually serve a long-term purpose without a specific end point, such as a traditional local community organised around ongoing survival, or an online community sharing information about a niche interest. Now that communities can form without the need for locality, modern communities are considerably more complex and serve a far broader range of purposes.

"The cutting edge of scientific discourse is migrating to virtual communities, where you can read the electronic pre-preprinted reports of molecular biologists and cognitive scientists. At the same time, activists and educational reformers are using the same medium as a political tool. You can use virtual communities to find a date, sell a lawnmower, publish a novel, or conduct a meeting."

– Howard Rheingold, The Virtual Community

An active, healthy community will have members contributing relevant content that drives the community's purpose, whatever it may be. With effective moderation, engaged members can post relevant content in a safe, shared online space, without it being undermined by other users posting inaccurate or extraneous content that shifts the community's focus.

Moderation on the Recollect platform

Moderation is not only necessary for removing incorrect and potentially harmful contributions; it is also a vital tool for encouraging a community to engage in a safe and respectful manner.

As community engagement is a core focus of the Recollect platform, the implementation of enhanced moderation features should come as no surprise. Trusted by organisations whose brand image is of significant importance, Recollect's online digital collections and community engagement platform provides moderation tools versatile enough to tailor moderation to your audience's needs.

With this focus on community contributions, Recollect allows administrators to choose how they want to use moderation. Administrators can control whether contributions from community members are published on the website automatically (suited to a private, gated online community), require moderator approval before being published, or follow a mixed approach in which some contributions publish automatically while others require moderator approval.